Sorenson Video 1

This document is based on the document 'Description of the Sorenson Vector Quantizer #1 (SVQ1) Video Codec by Mike Melanson and Ewald Snel found at http://multimedia.cx/svq1-format.txt.

- FOURCCs: svq1, SVQ1, svqi

- Company: Sorenson Media

- Samples: http://samples.mplayerhq.hu/V-codecs/SVQ1/

- Patents: US #5,844,612, "Motion vector quantizing selection system"

Introduction

Sorenson Vector Quantizer #1 is identified by the four-character code SVQ1. This FOURCC will be used throughout this document to identify the codec.

SVQ1 is primarily used to encode video in Quicktime files. The format was commonly seen from 1998-2002 before its successor, SVQ3 (which is based on completely different coding concepts), was rolled out. The algorithm uses hierarchical adaptive multistage vector quantization with mean removal and motion compensation in order to achieve its compression.

The SVQ1 patent listed in the references is a good source for understanding both the encoding and decoding process. This document focuses on principles necessary to implement a SVQ1 decoder.

This document assumes familiarity with certain general and video-specific coding concepts such as Huffman (a.k.a. variable length) coding, YUV colorspaces, inter/intraframe coding, and motion compensation. A familiarity with vector quantization is useful, but this document will explain what you need to know about the particular flavor of VQ that SVQ1 uses.

Algorithm Basics

SVQ1 operates on vectors (think blocks) of pixels. At the highest level, the algorithm breaks an image into 16x16 blocks of pixels, or 256-pixel vectors. For each vector, the encoder performs a motion search (if the frame is not a keyframe), mean removal, and multi-stage codebook search through a series of pre-defined codebooks. If the encoder finds a good match for the vector after one or more of these operations, then it outputs the proper bit coding for that vector. Otherwise, the 16x16 block is broken in half into 2 16x8 blocks (128-pixel vectors) and the same operation is repeated (minus the motion search, which only operates on the 16x16 vector level). SVQ1 operates at 6 block sizes:

16x16 16x8 8x8 8x4 4x4 4x2

If the encoder has to break a 4x4 block into 2 4x2 blocks, the block cannot be subdivided further and must be encoded as is using mean removal and a combination of vector codebooks.

SVQ1 uses YUV 4:1:0 planar data as its native colorspace. This is also known as YUV9. Each 4x4 block of pixels is represented by one Y sample per pixel, but only 1 U sample and 1 V sample for the entire block.

The SVQ1 coding method embodies 3 types of frames: Intraframes, or I-frames, which are coded completely by themselves with no data dependencies from other frames; predicted frames, or P-frames, which are predicted from the previous I- or P-frame and thus have a data dependency on the previous I- or P-frame; and droppable P-frames, or B-frames, which are predicted from either the previous I- or P-frame and thus have a data dependency. However, the latter frame type is similar to MPEG B-frames in the sense that other frames cannot predict from them, so it is safe to drop them when decoding is behind schedule. SVQ1 B-frames are dissimilar to MPEG B-frames in the sense that they are unidirectional and not bidirectional.

Stream Format And Header

A single SVQ1 chunk has the following high-level organization:

header | Y plane data | U plane data | V plane data

Each SVQ1 chunk begins with a header which can be a variable number of bits in length. The header bits have the following meaning:

frame code 22 bits

if (bits 6-5 of frame code are both 0 (i.e., frame code & 0x60 == 0))

frame is invalid

temporal reference 8 bits

picture type 2 bits

if (picture type is I-frame)

if (frame code is 0x50 or 0x60)

checksum 16 bits

if (frame code ^ 0x10 > 0x50)

embedded string n bits

unknown 2 bits

unknown 2 bits

unknown 1 bit

frame size code 3 bits

if (frame size code is 7)

frame width 12 bits

frame height 12 bits

checksum present 1 bit

if (checksum present)

use packet chksum 1 bit

component chksums 1 bit

after image data

unknown_field_1 2 bits

if (unknown_field_1 is not 0)

invalid frame header

unknown_flag_1 1 bit

if (unknown_flag_1)

unknown 1 bit

unknown 4 bits

unknown 1 bit

unknown 2 bits

while (next bit is 1)

read a byte from the stream

True, many of the header fields are unknown. But the stream can be decoded just fine with the known header fields. The picture type has the following 4 possible values:

0 I-frame 1 P-frame 2 B-frame 3 invalid frame code, do not proceed with frame decode

If the frame size code is 7, 12 bits are stored in the header for each of the width and the height. If the frame size code is any of the other 7 possible values, the frame size is one of these common frame dimension pairs:

code width height 0 160 120 1 128 96 2 176 144 3 352 288 4 704 576 5 240 180 6 320 240

A SVQ1 chunk can have a message string embedded inside the chunk header. If (frame code ^ 0x10 > 0x50), follow this algorithm to decoder the string:

string[0] = next 8 bits xor_byte = string_xor_table[string[0]] foreach i from 1..string[0]-1 next_byte = next 8 bits string[i] = (next_byte) ^ xor_byte xor_byte = string_xor_table[next_byte]

Note that since string[0] holds the string length and is a byte, the maximum string length is 255 characters.

The specific details of the checksum coding are not all known.

Decoding Intraframe Plane Data

After the frame header is decoded, the bitstream contains all of the encoded data to render the Y plane, then the data for the U plane, then the V plane.

For the purposes of this description, each block size is assigned a level:

level 5 16x16 level 4 16x8 level 3 8x8 level 2 8x4 level 1 4x4 level 0 4x2

For each of the 3 planes in an intraframe, iterate through each 16x16 block, which are each level 5 blocks, from left->right, top->bottom.

processing a level 5 block:

get next bit in stream

if (bit is 1)

subdivide level 5 block into 2 level 4 blocks

insert top level 4 block into processing queue

insert bottom level 4 block into processing queue

else

process block, which entails:

fetch VLC from bitstream using the appropriate intra vector table;

this specifies the number of VQ stages

if stages is -1, skip this block

if (stages > 0) && (level >= 4)

invalid vector, error out of decode since levels 4 and 5 blocks do

not use multistage VQ

fetch VLC from bitstream using the intra mean table; this specifies

the mean removed from the vector during encoding

if (stages == 0)

set each sample in block to the mean value

else

fetch the next (stages * 4) bits from the bitstream; these specify

the codebooks to use in reconstructing the image; the first 4 bits

specify the first codebook to use, the next 4 bits specify the

second codebook, all the way up to a sixth codebooks for 6

possible stages

add the mean to the each value in the block

for each stage

add the appropriate codebook to the block

If the level 5 block was subdivided into 2 level 4 halves, process each half using the following algorithm:

processing a level 4 block:

get next bit in stream

if (bit is 1)

subdivide level 4 block into 2 level 3 blocks

insert left level 3 block into processing queue

insert right level 3 block into processing queue

else

process block the same way that a full level 5 block is processed, but

use the appropriate level 4 VLC tables and codebooks

If any level 3 blocks were inserted into the processing queue as a result of level 4 block subdivisions, process each level 3 block in order using this algorithm:

processing a level 3 block:

...

By now, you should be recognizing a pattern, e.g.:

process_block(level)

if (next_bit == 1) subdivide block into 2 (level - 1) halves insert first half into processing queue insert second half into processing queue else ...process block...

Where does it end? The function requires a base case where the processing can go no further. In this algorithm, blocks cannot be subdivided beyond level 0 (4x2 samples):

process_block(level)

if (level > 0) && (next_bit == 1) subdivide block into 2 (level - 1) halves insert first half into processing queue insert second half into processing queue else ...process block...

Thus, for each block, the entire block is processed as a level 5 block and possibly subdivided into top and bottom halves for processing after the level 5 block is processed. Suppose a level 5 block is subdivided and that the resulting top level 4 block is further subdivided while the bottom level 4 block is processed by itself. The processing can be represented as a tree:

--- 5 ---

| |

-- 4 -- 4

| |

3 3

The SVQ1 algorithm processes each level of this tree in order from 5 down to 0. In the above example, the decoder would unpack the information for the level 5 block, then each level 4 block, then each level 3 block, before moving onto the next level 5 block. This is known as a breadth-first tree traversal, as opposed to a depth-first traversal which would process each of the 2 halves of a block subdivision before moving onto the next block in the same level. The following abstract information would be used to represent the level 5 block in the above example:

1 (subdivide level 5 block)

1 (subdivide top level 4 block)

0x (0 = no subdivision, x = removed mean and multistages for bottom

level 4 block)

0x (0 = no subdivision, x = removed mean and multistages for left

level 3 block)

0x (0 = no subdivision, x = removed mean and multistages for right

level 3 block)

Note that if the processing tree subdivides to level 0, there will be no 0 in front of the mean and multistage information for the block since the block can not be subdivided further.

As an example of the processing steps needed to reconstruct an intraframe block, consider an example where the decoder has recursed down to the base case of level 0. Fetch a VLC from the bitstream using the intra multistage VQ table. For example, the retrieved VLC is 2 (from a range of -1..6). This indicates mean removal plus 2 stages of VQ. Extract a VLC from the bitstream using the intra mean removal VLC table. For this example, the removed mean is 61. Extract 8 more bits from the bitstream, 4 for each of the VQ stages. The first 4 bits indicate the stage 1 codebook and the second 4 bits indicate the stage 2 codebook. For this example, stage 1 = 4 and stage 2 = 14.

base intra vector = 0 0 0 0 0 0 0 0

removed mean = 61

stage 1, codebook #4 vector for level 0 vectors: 7 -16 -10 20 7 -17 -10 20

stage 2, codebook #14 vector for level 0 vectors: -13 -6 -1 -4 25 37 -2 -35

First, add the mean to each element of the base vector:

61 61 61 61 61 61 61 61

Then, apply the stage 1 codebook:

68 45 51 81 68 44 51 81

Then, apply the stage 2 codebook:

55 39 50 77 93 81 49 46

This is the final 4x2 block of samples that will go into the output.

A note about wraparound/clipping/clamping/saturation, etc.: At each stage of the addition or subtraction, the result of saturated to an unsigned byte range, 0..255.

Decoding Interframe Plane Data

Interframe decoding allows the SVQ1 encoder to use existing blocks of data from the previous frame as references to encode current blocks (motion compensation), encode blocks in the same manner as in an intraframe, or just skip blocks altogether if they remain unchanged from the previous frame. Interframes are either P- or B- frames and use data from the previous I- or P-frame, but never the previous B-frame.

For each of the 3 planes in an interframe, iterate through each level 5 block, from left->right, top->bottom. Each level 5 block has one of the following coding modes:

0 SKIP: the block remains unchanged from the previous I- or P- frame

1 INTER: perform the INTRA process using a motion block from the

previous frame

2 INTER_4MV: same as INTER but use 4 motion vectors instead of 1

3 INTRA: the block is decoded using the same process described in the

previous section

SVQ1 motion compensation operates in two modes. One mode operates on an entire 16x16 block (INTER mode). The second mode splits a block into four 8x8 subblocks and each subblock has a motion vector (INTER_4MV mode). The motion vectors always operate on a half-pixel grid, similar to MPEG-2.

A motion vector is predicted from the previous left, top, and top-right motion vectors, if the vectors are available and coded within the current frame. A vector of (0,0) is used in place of any unavailable vectors. The predicting x motion component is the median value of the x components of the 3 candidate vectors. The predicting y motion component is computed the same way using the 3 candidate y components. In the case that only one vector is available, that vector becomes the predicting vector. Of course, if no vectors are available, then the predicting vector is (0,0) which will be the same as (x,y) once the motion components are decoded.

For example, if this is the first block of a slice which is not 0, then the previous left vector, pl(x,y), is (0,0). Assume that the previous top (pt) and top-right (ptr) vectors are both coded within the current frame. pt(x,y) = (5,17) and ptr(x,y) = (-9,12):

for x, MEDIAN(0,5,-9) = 0 for y, MEDIAN(0,17,12) = 12

Thus, the predicting motion vector is (0,12). From here, the x motion component for this block is decoded from the stream and added to 0, followed by the y component which is added to 12. (0+x,12+y), clipped to a range of -32..31, is the final motion vector.

An individual motion vector component is coded as a VLC magnitude followed by a sign bit. If the next bit of the bitstream is 1 then the motion component is 0. Overall, the algorithm for motion vector component extraction resembles the following:

if (peek_bit(bitstream) == 1)

motion_component = 0

skip_bit(bitstream)

else

motion_component = get_vlc(bitstream, motion_component_vlc_table)

if (get_bit(bitstream) == 1)

motion_component = -motion_component

Each 8x8 block in a frame corresponds to a motion vector. When a motion vector is decoded for an INTER mode block, the motion vectors for the 4 constituent 8x8 blocks in the 16x16 block are all set to that same motion vector. If a 16x16 block is decoded with either the INTRA or SKIP mode, the motion vectors for its 4 8x8 blocks are reset to 0, which is equivalent to indicating that the blocks were not coded in the current frame. In the case that a 16x16 block is decoded using the INTER_4MV mode, each of the 8x8 blocks ends up with a different motion vector.

If the current block is coded as INTER and only uses one motion vector for the entire 16x16 block, the motion vector is predicted from the previous left 8x8 block, and the previous top and top-right motion vectors for 16x16 blocks. To clarify, consider the following example in which each character in the grid represents a 8x8 block:

A B C D E G H I J K M N 1 2 Q S T 3 4 W

[1 2]

The [3 4] 16x16 block will use block N as previous left, block C as previous top, and block E as previous top-right.

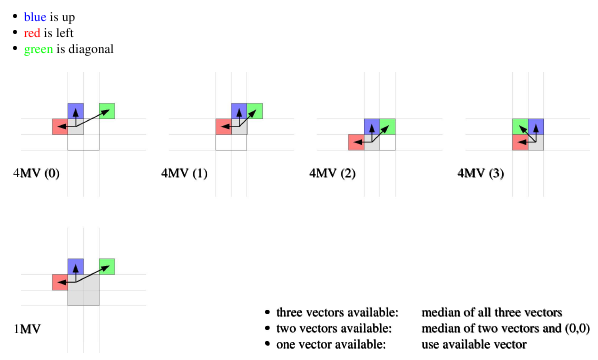

In the case of INTER_4MV mode, each of the 4 8x8 blocks gets a different motion vector. Follow this table as an example:

Predicted Motion Vector

L T T-R

+--------------

1| N I K

Block 2| 1 J K

3| T 1 2

4| 3 2 1

For block 3, the top-left motion vector is used rather than the top-right motion vector.

Note that the motion vector for block 1 should be predicted and its differential motion components decoded from the stream, applied, and the resulting motion vector saved, all before decoding the motion vector for block 2. The 4 motion vectors need to be fully decoded in series since they depend on each other.

Once the motion vector is fully decoded and the reference 16x16 block is copied from the appropriate block from the previous frame to the current block in the current frame, repeat the same familiar intraframe decoding process. The only difference is that you will add the codebook vectors and removed mean to the interframe block rather than to a block of zero samples.

The following is a graphical depiction on the motion vector prediction logic used in SVQ1:

Appendix A: SVQ1 Data Tables

This is a listing of all of the data tables necessary to encode/decode SVQ1 data.

VLC tables:

- interframe block coding types: This table is used for decoding the coding mode for each level 5 block in an interframe.

- motion vector component table: This table is used for extracting differential motion component magnitudes from the bitstream. The values from this table range from 1..32 (0 is a special case separate from the table).

- intraframe mean removal tables:

- interframe mean removal tables: These tables contain removed mean values. The intraframe mean removal table ranges from 0..255 while the interframe table ranges from -256..255.

- intraframe multistage VQ tables, one for each block level:

- interframe multistage VQ tables, one for each block level:

These tables describe how a block is coded. * The values in these tables range from -1..6:

- -1: skip block

- 0: block is coded as mean-only (no multistage VQ)

- 1..6: block is coded as mean plus 1..6 stages of VQ

- Codebooks:

- intraframe vector codebooks, one for each level 0..3, 16 for each of 6 stages:

- interframe vector codebooks, one for each level 0..3, 16 for each of 6 stages:

These tables contain a large number of signed bytes that are applied to vector levels to reconstruct vectors for a final output frame.

- Other:

- checksum table: This table contains values for calculating checksums over the encoded and decoded SVQ1 data.

- string XOR table: This table contains logical XOR values used to obfuscate strings embedded within SVQ1 frame headers.

All of these data tables can be found in the CVS source repository for the ffmpeg project: http://svn.mplayerhq.hu/ffmpeg/trunk/libavcodec/svq1_cb.h http://svn.mplayerhq.hu/ffmpeg/trunk/libavcodec/svq1_vlc.h http://svn.mplayerhq.hu/ffmpeg/trunk/libavcodec/svq1.c

References

Motion vector quantizing selection system U.S. Patent #5,844,612 Inventors: Israelsen; Paul D. (North Logan, UT) Assignee: Utah State University Foundation (North Logan, UT)

Quicktime.qts mega-DLL: http://www.apple.com/quicktime/